OpenAI’s Sora is a generative AI model that produces short, high-quality videos directly from text prompts. Because it can generate clips up to a minute long, developers, creators, and businesses can explore video generation without expensive production setups.

How Does Sora Work?

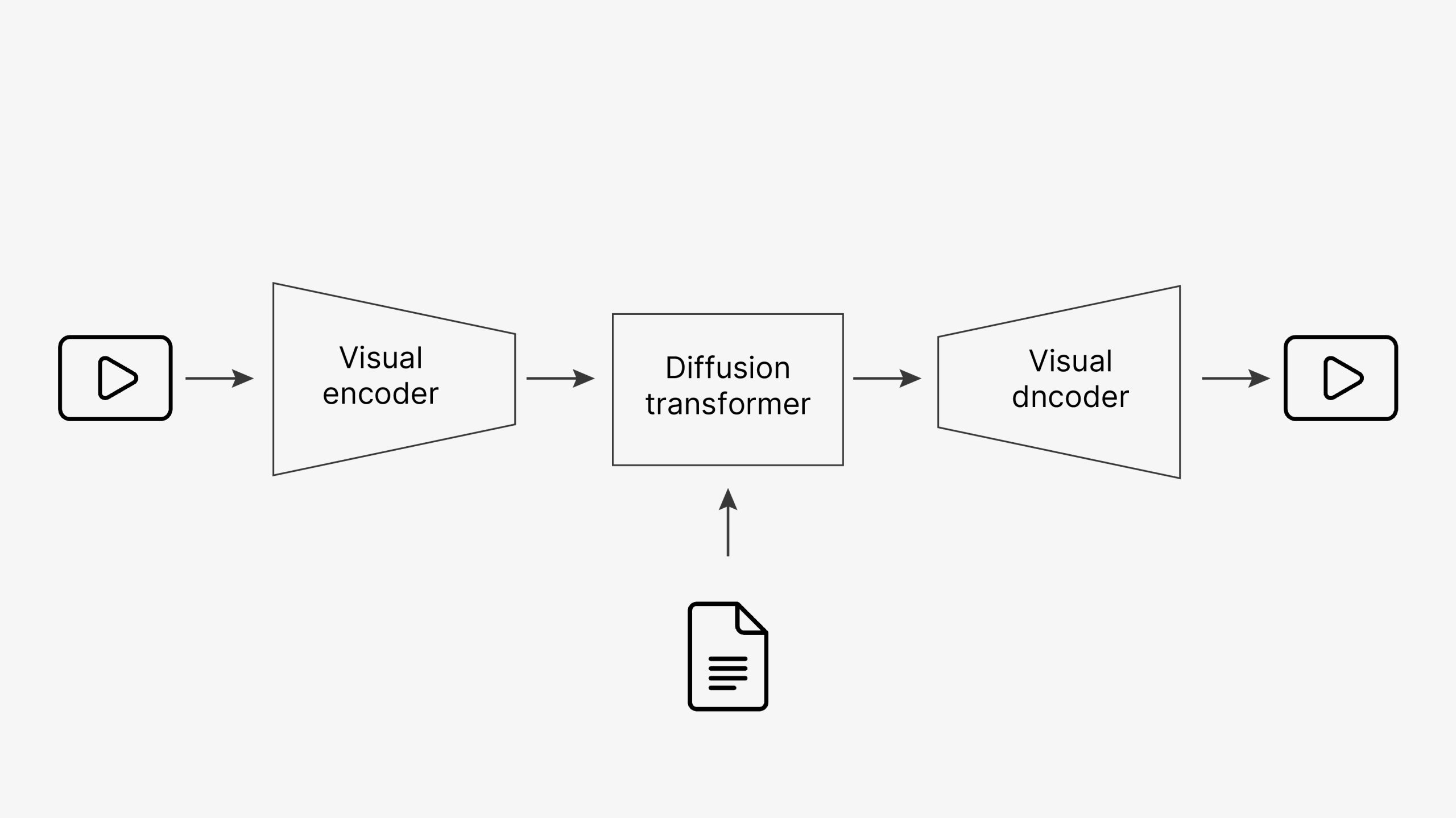

Sora uses diffusion-based architectures similar to image generators but extended into the video domain. It learns both spatial and temporal patterns, allowing it to create consistent motion and realistic transitions across frames.
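To make the idea concrete, here is a toy sketch of the forward (noising) half of a generic DDPM-style diffusion process applied to a tiny video. It is not Sora's implementation, whose internals are not covered here; the `add_noise` helper and the nested-list layout `[frame][row][col]` are assumptions for illustration. The point is that the whole clip is noised, and later denoised by the model, as one block spanning both space and time rather than frame by frame.

```python
import math
import random

def add_noise(video, alpha_bar, rng):
    """Blend a video with Gaussian noise: x_t = sqrt(a) * x_0 + sqrt(1 - a) * eps.

    video is a nested list indexed [frame][row][col]; alpha_bar in [0, 1]
    controls how much of the clean signal survives (1.0 = clean, 0.0 = pure noise).
    """
    keep = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [
        [[keep * px + noise_scale * rng.gauss(0.0, 1.0) for px in row] for row in frame]
        for frame in video
    ]

# A 3-frame, 2x2 "video" in which every pixel brightens over time.
clean = [[[0.1 * t] * 2 for _ in range(2)] for t in range(3)]
rng = random.Random(0)

slightly_noisy = add_noise(clean, alpha_bar=0.99, rng=rng)  # early diffusion step
pure_noise = add_noise(clean, alpha_bar=0.0, rng=rng)       # final diffusion step
```

A trained model learns to reverse this process, which is where consistency across neighbouring pixels and neighbouring frames has to be learned.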

Getting Started with Sora

At the time of writing, Sora is accessible via OpenAI’s API. To get started:

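    pip install openai python-dotenv

Then, in Python:

    import base64

    import openai
    from dotenv import load_dotenv

    load_dotenv()  # reads OPENAI_API_KEY from a local .env file

    prompt = "A cinematic shot of a futuristic city at sunset, with flying cars and glowing neon lights"

    # The method and model names below are as given at the time of writing;
    # they may differ in your SDK version, so check the current API reference.
    response = openai.Video.create(
        model="sora-1.0",
        prompt=prompt,
        duration=10,  # seconds
    )

    # Save video: decode the base64 payload to bytes before writing
    with open("output.mp4", "wb") as f:
        f.write(base64.b64decode(response["data"][0]["b64_json"]))

Building a Text-to-Video Pipeline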

You can integrate Sora into a content creation workflow. A typical pipeline looks like this:

- Accept user input (text prompt)

- Send prompt to Sora API

- Receive generated frames or video clip

- Optionally add background music, captions, or transitions

- Deliver the final video file
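
For the optional post-processing step, one common option is to mux a music track onto the generated clip with the `ffmpeg` command-line tool. The sketch below builds the command and wraps it in a small runner; the file names and the `build_mux_command` and `add_audio` helpers are illustrative assumptions, and it presumes `ffmpeg` is installed and on the `PATH`.

```python
import subprocess

def build_mux_command(video_path, audio_path, output_path):
    """Return an ffmpeg argument list that adds audio_path as the clip's soundtrack."""
    return [
        "ffmpeg",
        "-y",                 # overwrite the output file if it exists
        "-i", video_path,     # input 0: the generated clip
        "-i", audio_path,     # input 1: the music track
        "-map", "0:v:0",      # take video from input 0
        "-map", "1:a:0",      # take audio from input 1
        "-c:v", "copy",       # keep the video stream as-is (no re-encode)
        "-c:a", "aac",        # encode audio to AAC for MP4 compatibility
        "-shortest",          # stop at the end of the shorter stream
        output_path,
    ]

def add_audio(video_path, audio_path, output_path):
    """Run ffmpeg and raise CalledProcessError if it fails."""
    subprocess.run(build_mux_command(video_path, audio_path, output_path), check=True)

command = build_mux_command("output.mp4", "music.mp3", "final.mp4")
print(" ".join(command))
```

Calling `add_audio("output.mp4", "music.mp3", "final.mp4")` would run the command. Captions and transitions need additional ffmpeg filters or a video editing library and are left out of this sketch.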

Example Workflow

    # Example pseudo-code for the pipeline.
    # sora.generate, add_audio_and_captions, and save are placeholders, not real library calls.

    prompt = "A time-lapse of blooming flowers in a garden"
    video = sora.generate(prompt, duration=15)

    # Add captions or audio overlay
    final_video = add_audio_and_captions(video, audio_track="music.mp3")
    save(final_video, "flowers.mp4")

Creative Use Cases

- Advertising: Generate short product videos for social media campaigns.

- Education: Create quick visual explainers for complex topics.

- Entertainment: Prototype animated scenes or storyboards for films and games.

- Personal Projects: Turn imaginative prompts into fun video clips.

Best Practices

- Write detailed, structured prompts for better control over output.

- Use shorter durations for higher-quality results; longer clips may introduce artifacts.

- Experiment with style modifiers (cinematic, anime, 3D render, etc.).

- Combine Sora with other tools like text-to-speech for narration or music generation for soundtracks.
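
One way to keep prompts detailed and structured is to assemble them from named parts, which also makes it easy to swap style modifiers. The helper below is a hypothetical sketch: `build_prompt` and its fields (subject, setting, camera, style) are assumptions for illustration and not part of any Sora API.

```python
def build_prompt(subject, setting="", camera="", style=""):
    """Join the non-empty parts of a prompt into one comma-separated string."""
    parts = [camera, subject, setting, style]
    return ", ".join(part.strip() for part in parts if part.strip())

base = {
    "subject": "a futuristic city with flying cars",
    "setting": "at sunset with glowing neon lights",
    "camera": "wide aerial shot",
}

# Try the same scene with different style modifiers.
for style in ("cinematic", "anime", "3D render"):
    print(build_prompt(style=f"{style} style", **base))
```

Keeping the scene description fixed while varying only the style makes it easier to compare outputs side by side.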

Challenges and Limitations

While Sora is powerful, it has limitations:

- Complex motion (e.g., many moving objects) may cause artifacts.

- Video length is limited (currently around 1 minute).

- High compute cost for generating longer or higher-resolution clips.
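
One way to work within the length limit is to plan a longer video as a sequence of shorter clips that are generated separately and joined afterwards. The helper below is a sketch of that planning step; `plan_segments` and the 15-second cap are assumptions chosen for illustration, not limits documented by the API.

```python
def plan_segments(total_seconds, max_clip_seconds=15):
    """Split a whole-second target duration into clip lengths of at most max_clip_seconds."""
    if total_seconds <= 0 or max_clip_seconds <= 0:
        raise ValueError("durations must be positive")
    full_clips, remainder = divmod(total_seconds, max_clip_seconds)
    segments = [max_clip_seconds] * full_clips
    if remainder:
        segments.append(remainder)  # final, shorter clip
    return segments

print(plan_segments(50))  # [15, 15, 15, 5]
```

Separately generated clips are not guaranteed to match visually, so it helps to reuse the same subject and style wording in every segment's prompt.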

Conclusion

Text-to-video is quickly becoming a reality thanks to models like Sora. For developers and creators, this opens up new opportunities in content creation, education, entertainment, and beyond. By integrating Sora into your workflows, you can bring ideas to life visually without traditional video production overhead.

"Video generation is the next big leap in generative AI, bringing stories and concepts to life in motion." - Ashish Gore

If you’d like to collaborate on building applications with text-to-video models like Sora, feel free to reach out through my contact information.