AI is no longer experimental in financial institutions; it is deeply embedded in credit risk models, trading algorithms, fraud detection, and customer support. With this adoption comes responsibility. Regulators and customers alike expect transparency, fairness, and accountability. Responsible AI governance provides the framework to meet these expectations while fostering innovation. In this post, I’ll explore what responsible AI governance means for financial services and how to implement it effectively.

Why governance matters

- High-stakes decisions. Credit approvals, loan pricing, and fraud detection directly impact people’s lives.

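- Regulatory scrutiny. Regulations such as the EU AI Act and GDPR, along with local banking guidelines, demand explainability and fairness. The EU AI Act, for example, classifies AI systems used to assess individuals’ creditworthiness as high-risk.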

- Reputation risk. Unchecked AI failures can erode public trust and trigger financial penalties.

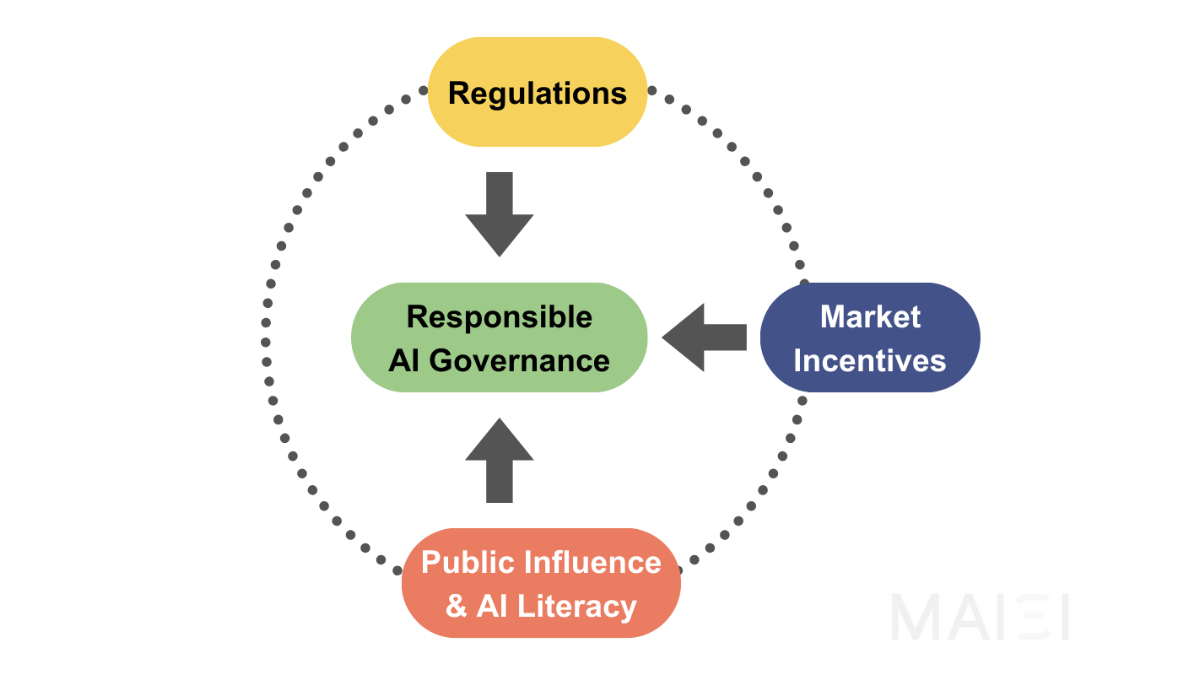

Core pillars of responsible AI governance

- Accountability. Clear ownership of AI models, with defined roles for data scientists, compliance officers, and executives.

- Transparency. Ensuring decisions can be explained to regulators and customers in understandable terms.

- Fairness. Monitoring models for discriminatory outcomes across demographic groups.

- Privacy & security. Safeguarding customer data with encryption, minimization, and secure environments.

- Monitoring. Continuous oversight of models for drift, bias, and operational risk.
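The fairness pillar is often made concrete with simple group-level metrics. Below is a minimal sketch that assumes binary approve/decline decisions and a single protected attribute. It computes each group's approval rate and the disparate impact ratio (lowest rate divided by highest). The 0.8 threshold borrows the "four-fifths rule" from US employment-selection guidance. It is a screening heuristic, not a legal test for credit, and the group labels and data are hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions, groups):
    """Approval rate per group; each decision is 1 (approved) or 0 (declined)."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        approved[group] += decision
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions, groups):
    """Lowest group approval rate divided by the highest group approval rate."""
    rates = approval_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no approvals in any group; ratio is undefined")
    return min(rates.values()) / highest


# Hypothetical audit sample: 1 = approved, 0 = declined
decisions = [1, 1, 0, 1, 1, 0, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

ratio = disparate_impact_ratio(decisions, groups)
print(approval_rates(decisions, groups), round(ratio, 2))
if ratio < 0.8:  # rule-of-thumb threshold, not a regulatory limit
    print("Flag for fairness review")
```

A single ratio neither proves nor rules out discrimination. It works best as a trigger for deeper review, alongside other checks such as error rates by group.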

Frameworks to adopt

Several established frameworks can help financial institutions operationalize responsible AI:

- NIST AI Risk Management Framework. Focused on trustworthiness and risk assessment, organized around four core functions: Govern, Map, Measure, and Manage.

- OECD AI Principles. Promote human-centric, fair, and transparent AI practices globally.

- Responsible AI guidance from the Reserve Bank of India (RBI) and the Securities and Exchange Board of India (SEBI). Increasingly referenced in Indian financial regulation.

Practical steps for implementation

- Set up an AI governance board with cross-functional stakeholders.

- Develop an AI model inventory to track models in production.

- Adopt model risk management (MRM) practices aligned with Basel/ECB guidelines.

- Use explainability tools like SHAP or LIME for high-impact models.

- Conduct regular bias and fairness audits across customer segments.
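An AI model inventory can start as one structured record per model. The sketch below assumes a small, hypothetical set of fields: owner, risk tier, last validation date, and explainability method. It flags models whose periodic review is overdue and high-risk models with no explainability method recorded. The field names, tiers, review intervals, and example models are illustrative only. In practice they should come from your own MRM policy.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical review intervals per risk tier, in days
REVIEW_INTERVAL_DAYS = {"high": 90, "medium": 180, "low": 365}


@dataclass
class ModelRecord:
    model_id: str
    purpose: str
    owner: str            # accountable business owner
    risk_tier: str        # "high", "medium", or "low"
    last_validated: date
    explainability: str   # e.g. "SHAP", "LIME", or "none"

    def review_due(self, today: date) -> bool:
        """True if the last validation is older than the tier's review interval."""
        interval = timedelta(days=REVIEW_INTERVAL_DAYS[self.risk_tier])
        return today - self.last_validated > interval


def overdue(inventory, today):
    """IDs of models whose periodic validation is overdue."""
    return [m.model_id for m in inventory if m.review_due(today)]


def missing_explainability(inventory):
    """IDs of high-tier models with no explainability method recorded."""
    return [m.model_id for m in inventory
            if m.risk_tier == "high" and m.explainability == "none"]


# Hypothetical inventory entries
inventory = [
    ModelRecord("credit-pd-v3", "Retail credit default probability",
                "Credit Risk", "high", date(2024, 1, 15), "SHAP"),
    ModelRecord("fraud-txn-v7", "Card transaction fraud scoring",
                "Fraud Ops", "high", date(2024, 5, 1), "SHAP"),
    ModelRecord("chat-intent-v2", "Support chatbot intent routing",
                "Customer Service", "low", date(2024, 2, 1), "none"),
]

print(overdue(inventory, today=date(2024, 6, 1)))
print(missing_explainability(inventory))
```

Keeping the inventory as plain structured data makes it easy to export to a registry or dashboard later, without tying the governance process to a specific MLOps tool.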

Hands-on: explainability with SHAP

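```python
import shap

# Assumes `model` is an already-trained tree-based model (e.g. an XGBoost
# or LightGBM classifier) and `X_test` is a pandas DataFrame of held-out
# features with the same columns the model was trained on.
explainer = shap.TreeExplainer(model)

# Compute per-feature SHAP contributions for every test row
shap_values = explainer(X_test)

# Visualize global feature importance and the direction of each feature's effect
shap.summary_plot(shap_values, X_test)
```

The summary plot ranks features by their overall contribution and shows whether high or low feature values push predictions up or down. This helps compliance teams interpret tree-based models in credit or fraud detection.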
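For customer-facing explanations, per-applicant SHAP values can be turned into a short list of the factors that most worsened an outcome. This is a minimal sketch under three assumptions. First, one applicant's contributions have already been extracted as a feature-to-value mapping, for example `dict(zip(X_test.columns, shap_values.values[i]))` for row `i`. Second, the model scores default risk, so positive contributions push toward decline. Third, the function name, feature names, and numbers are hypothetical.

```python
def top_adverse_factors(contributions, k=3):
    """Return up to k features with the largest positive contributions to risk.

    `contributions` maps feature name -> SHAP value for one applicant. Positive
    values are assumed to push the default-risk score upward (toward decline).
    """
    adverse = [(feature, value) for feature, value in contributions.items() if value > 0]
    adverse.sort(key=lambda item: item[1], reverse=True)
    return [feature for feature, _ in adverse[:k]]


# Hypothetical SHAP contributions for one applicant (model output units)
applicant = {
    "debt_to_income": 0.42,
    "recent_delinquencies": 0.31,
    "credit_history_months": -0.18,
    "utilization_ratio": 0.12,
    "income": -0.05,
}
print(top_adverse_factors(applicant))
```

Mapping feature names to plain-language wording for customers is usually a compliance decision rather than a modeling one, so it is left out of this sketch.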

Case study: AI governance in a retail bank

A retail bank introduced an AI governance framework after regulators raised questions about the fairness of its credit scores. It created an AI model registry, mandated SHAP explainability for all risk models, and introduced quarterly audits. The result: improved regulator confidence, reduced internal friction, and a roadmap for scaling AI responsibly.

Challenges in AI governance

- Cultural resistance. Business teams may view governance as slowing down innovation.

- Skill gaps. Compliance teams may lack technical expertise to oversee AI models.

- Tooling limitations. Current monitoring tools may not cover bias, drift, and explainability comprehensively.

Best practices

- Establish governance early, not after issues arise.

- Invest in training for compliance and business teams.

- Use automated model monitoring tools integrated with MLOps pipelines.

- Balance innovation with accountability — governance should enable, not stifle.
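Drift detection is a common first check to automate in a monitoring pipeline. Below is a minimal sketch of the Population Stability Index (PSI), a metric widely used in credit risk. PSI compares a score's distribution at development time with its distribution in production. The sketch assumes both periods are already bucketed into the same score bands. The 0.1 and 0.25 cut-offs are common rules of thumb rather than regulatory limits, and the counts are hypothetical.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two binned distributions.

    Both inputs are counts over the same bins. Proportions are floored at
    `eps` so empty bins do not cause log(0) or division by zero.
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both distributions must use the same bins")
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    value = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)
        a_pct = max(a / a_total, eps)
        value += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return value


def drift_status(value):
    """Map a PSI value to a rule-of-thumb band; calibrate to your own policy."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "monitor"
    return "investigate"


# Hypothetical score-band counts: development sample vs. last month in production
baseline = [100, 200, 400, 200, 100]
current = [80, 150, 380, 250, 140]

value = psi(baseline, current)
print(round(value, 3), drift_status(value))
```

In a scheduled MLOps job, the status string could gate an alert or a review ticket, so that drift reaches the model owner named in the inventory.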

Conclusion

Responsible AI governance is not optional in finance; it is foundational. Institutions that build governance frameworks aligned with regulation and ethics will be better positioned to innovate responsibly and earn long-term trust. AI can be a competitive advantage, but only if it is well governed.

"Good governance transforms AI from a risk to an opportunity." – Ashish Gore
