Humans understand the world using multiple senses — we listen, read, watch, and speak. For AI systems to approach human-level understanding, they must also combine multiple modalities like text, images, audio, and video. This is the promise of multi-modal AI. In this post, I’ll walk through the foundations of multi-modal AI, key architectures, real-world applications, and the challenges that remain.

What is multi-modal AI?

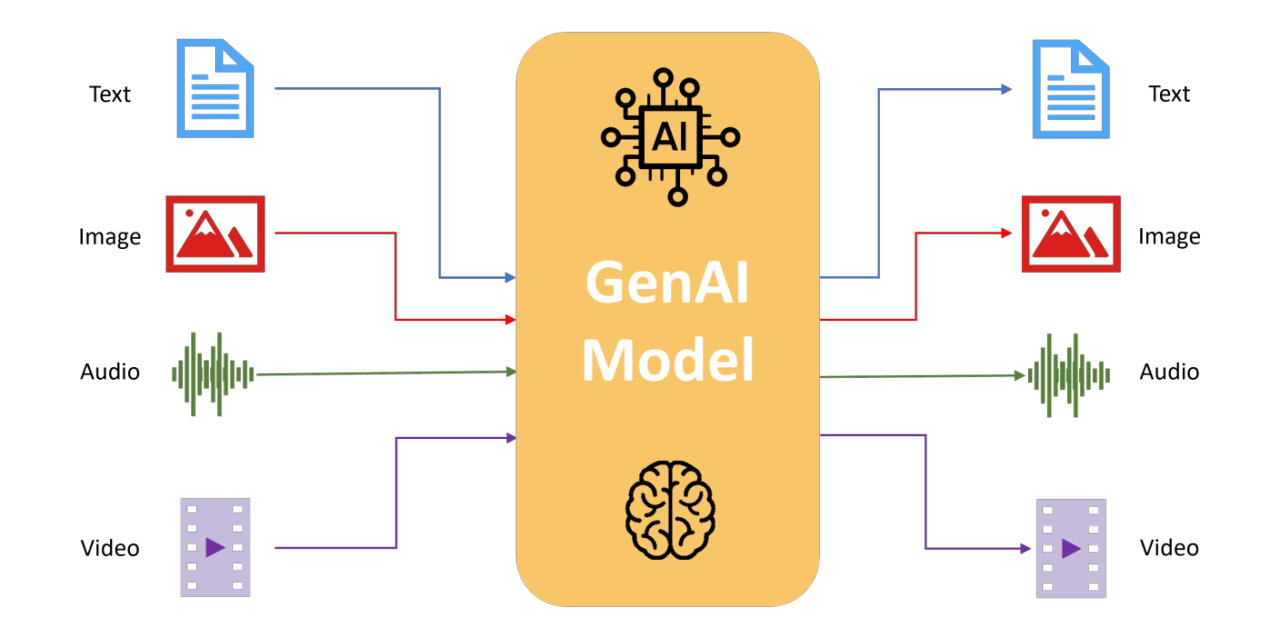

Multi-modal AI refers to models that can process and integrate different types of data, such as natural language, visual inputs, and speech, to generate richer and more accurate outputs. Instead of training a separate, isolated model for each modality, multi-modal systems combine the modalities, often by mapping them into a shared representation.

Why multi-modal learning matters

- Contextual richness. Text alone can be ambiguous; combining it with vision or speech adds clarity.

- Human-like interaction. Enables natural experiences like voice-based assistants that understand visual context.

- Cross-modal transfer. What a model learns from one modality can improve its performance in another, for example using text supervision to train better image classifiers.

- Broader applications. Unlocks tasks like video captioning, visual question answering, and multi-sensor analysis.

Core architectures

Early fusion

Data from multiple modalities is combined at the input stage, before being passed through the model. Example: concatenating text embeddings and image feature vectors into a single input vector.
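A minimal sketch of early fusion in NumPy, assuming the text and image features have already been extracted by some upstream encoders. The random arrays, their dimensions, the three output classes, and the helper `early_fusion` are all hypothetical stand-ins; the point is only that a single downstream model receives one concatenated vector per example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical pre-computed features for a batch of 4 examples
text_features = rng.normal(size=(4, 8))    # e.g. sentence embeddings (dim 8)
image_features = rng.normal(size=(4, 16))  # e.g. CNN image features (dim 16)


def early_fusion(text_features, image_features):
    """Concatenate per-example features from both modalities into one input vector."""
    return np.concatenate([text_features, image_features], axis=-1)


fused = early_fusion(text_features, image_features)  # shape (4, 24)

# One downstream model sees both modalities at once; a random linear layer with
# 3 hypothetical output classes stands in for a trained classifier here.
W = rng.normal(size=(fused.shape[1], 3))
logits = fused @ W

print(fused.shape, logits.shape)  # (4, 24) (4, 3)
```

Because the modalities are merged before any learning happens, the model can in principle pick up interactions between them, but it also has to cope with features that may live on very different scales.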

Late fusion

Each modality is processed independently, and the results are merged at the decision stage. This is simpler but may miss cross-modal interactions.
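A minimal late-fusion sketch, assuming two independently trained classifiers (one for text, one for images) that each output logits over the same two classes. Averaging their class probabilities with a weight is one simple merging rule among several (voting and learned stacking are others); the function names and the example logits are hypothetical.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def late_fusion(text_logits, image_logits, text_weight=0.5):
    """Merge per-modality predictions by a weighted average of class probabilities."""
    p_text = softmax(text_logits)
    p_image = softmax(image_logits)
    return text_weight * p_text + (1.0 - text_weight) * p_image


# Hypothetical outputs of the two separate models for one example
text_logits = np.array([[2.0, 0.0]])   # text model favors class 0
image_logits = np.array([[0.0, 2.0]])  # image model favors class 1

fused = late_fusion(text_logits, image_logits)
print(fused)  # ≈ 0.5 for both classes: the two votes cancel out
```

The tie illustrates the limitation above: each model only ever sees its own modality, so the fusion step can weigh their votes but cannot reason about how the text and the image relate to each other.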

Joint representation models

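These models use deep learning to map every modality into a shared latent space, where embeddings of related inputs (say, a photo and its caption) land close together. CLIP by OpenAI is a prime example: it trains an image encoder and a text encoder contrastively so that matching image-text pairs score higher than mismatched ones.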
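The contrastive idea behind CLIP-style joint embeddings can be sketched in NumPy. This is a simplified, illustrative symmetric cross-entropy loss, not OpenAI's training code: it assumes a batch where row i of `image_emb` and row i of `text_emb` form a matching pair, and it uses a fixed temperature of 0.07, whereas CLIP learns this value during training. The function names and random embeddings are hypothetical.

```python
import numpy as np


def l2_normalize(x):
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def log_softmax(x, axis):
    """Numerically stable log-softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric cross-entropy over an (N, N) image-text similarity matrix."""
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logits = img @ txt.T / temperature  # entry (i, j): image i vs. caption j
    diag = np.arange(len(logits))       # the correct caption for image i is caption i
    loss_image_to_text = -log_softmax(logits, axis=1)[diag, diag].mean()
    loss_text_to_image = -log_softmax(logits, axis=0)[diag, diag].mean()
    return (loss_image_to_text + loss_text_to_image) / 2


rng = np.random.default_rng(0)
image_emb = rng.normal(size=(8, 32))  # hypothetical batch of 8 image embeddings

# Perfectly aligned captions vs. captions shifted so every pair is wrong
aligned = contrastive_loss(image_emb, image_emb)
mismatched = contrastive_loss(image_emb, np.roll(image_emb, 1, axis=0))
print(aligned < mismatched)  # True
```

Minimizing this loss pulls each image toward its own caption and pushes it away from the other captions in the batch, which is what makes the shared space useful for matching text to images.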

Transformers for multi-modal learning

Transformer architectures, originally developed for NLP, are now widely used for multi-modal tasks. Examples include BLIP-2, Flamingo, and GPT-4V, which combine vision encoders with language models.
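One common way to connect a vision encoder to a language model is cross-attention, where text tokens act as queries over image patch features. The sketch below is a generic single-head cross-attention layer in NumPy with random, untrained weights; it is not the actual architecture of BLIP-2, Flamingo, or GPT-4V (Flamingo, for instance, interleaves gated cross-attention layers with a frozen language model), and all sizes and names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(text_tokens, image_patches, Wq, Wk, Wv):
    """Single-head cross-attention: each text token attends over all image patches."""
    Q = text_tokens @ Wq    # (T, d) queries from the language side
    K = image_patches @ Wk  # (P, d) keys from the vision side
    V = image_patches @ Wv  # (P, d) values from the vision side
    scores = Q @ K.T / np.sqrt(Q.shape[-1])  # (T, P) scaled dot products
    weights = softmax(scores, axis=-1)       # per-token distribution over patches
    return weights @ V, weights


rng = np.random.default_rng(0)
T, P = 5, 49                 # 5 text tokens, 7x7 = 49 image patches (hypothetical)
d_text, d_image, d = 32, 64, 16

text_tokens = rng.normal(size=(T, d_text))
image_patches = rng.normal(size=(P, d_image))
Wq = rng.normal(size=(d_text, d)) / np.sqrt(d_text)
Wk = rng.normal(size=(d_image, d)) / np.sqrt(d_image)
Wv = rng.normal(size=(d_image, d)) / np.sqrt(d_image)

attended, weights = cross_attention(text_tokens, image_patches, Wq, Wk, Wv)
print(attended.shape, weights.shape)  # (5, 16) (5, 49)
```

In a full model the attended features would typically be added back into the language model's hidden states, so each generated word can draw on the image regions most relevant to it.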

Hands-on example: CLIP for image-text alignment

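```python
import torch
import clip  # OpenAI's CLIP package: pip install git+https://github.com/openai/CLIP.git
from PIL import Image

model, preprocess = clip.load("ViT-B/32", device="cpu")

# Resize/crop/normalize the image and add a batch dimension
image = preprocess(Image.open("finance_chart.png")).unsqueeze(0)
# Tokenize the candidate captions to compare against the image
text = clip.tokenize(["A stock market chart", "A cat sitting on a chair"])

with torch.no_grad():
    image_features = model.encode_image(image)
    text_features = model.encode_text(text)

    # CLIP scores pairs by cosine similarity, so L2-normalize the embeddings
    image_features = image_features / image_features.norm(dim=-1, keepdim=True)
    text_features = text_features / text_features.norm(dim=-1, keepdim=True)

    # Scale by CLIP's learned temperature, then softmax over the captions
    logits = model.logit_scale.exp() * image_features @ text_features.T
    similarity = logits.softmax(dim=-1)

print(similarity)  # one probability per caption, summing to 1
```

This example scores each caption against the image: the printed tensor holds one probability per caption, and the higher value marks the caption CLIP considers the better match. Linking natural language to visual content this way is a cornerstone of multi-modal AI.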

Applications of multi-modal AI

- Healthcare. Combining medical images with clinical notes for better diagnosis.

- Finance. Analyzing earnings calls (speech + text) along with charts for investment insights.

- Retail. Personalized recommendations using browsing behavior, product images, and customer reviews.

- Autonomous vehicles. Fusing data from cameras, LiDAR, and digital maps for safer navigation.

- Assistive tech. Tools for visually impaired users combining speech recognition with image captioning.

Challenges in multi-modal AI

- Data alignment. Ensuring text, audio, and images are correctly paired and, for time-based media, synchronized.

- Compute intensity. Training multi-modal transformers requires large-scale infrastructure.

- Bias propagation. Bias in one modality can spill over and amplify across others.

- Evaluation complexity. Measuring performance across modalities is less straightforward than evaluating single-modality, single-task models.
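The data-alignment challenge can be made concrete with a small example: mapping word-level transcript timestamps (as a speech recognizer might produce) onto video frame indices. This pure-Python sketch is illustrative only; the input format, the function name `align_words_to_frames`, and the frame rate are hypothetical assumptions, and it treats both words and frames as half-open time intervals.

```python
import math


def align_words_to_frames(words, fps, num_frames):
    """Map each (word, start_s, end_s) to the indices of the video frames it overlaps.

    Frame i is assumed to cover the interval [i / fps, (i + 1) / fps).
    """
    aligned = []
    for word, start, end in words:
        first = math.floor(start * fps)
        last = max(first, math.ceil(end * fps) - 1)  # end time is exclusive
        last = min(last, num_frames - 1)             # clip to the video length
        aligned.append((word, list(range(first, last + 1))))
    return aligned


# Hypothetical transcript snippet from an earnings-call video at 4 frames/second
transcript = [("revenue", 0.0, 0.5), ("grew", 0.5, 1.25)]
print(align_words_to_frames(transcript, fps=4, num_frames=100))
# [('revenue', [0, 1]), ('grew', [2, 3, 4])]
```

Real pipelines also have to deal with clock offsets and drift between recording devices, which this sketch ignores.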

The road ahead

Research is pushing toward general-purpose multi-modal models that handle any combination of inputs seamlessly. GPT-4V, Google Gemini, and Meta’s ImageBind are early steps in this direction. The goal is to move beyond single-sense AI into systems that “see, hear, and understand” together, much like humans.

Conclusion

Multi-modal AI marks a step closer to human-like intelligence. By combining text, vision, and speech, these systems open new frontiers for interaction and insight. But the road is challenging, requiring careful work on alignment, fairness, and efficiency. For practitioners, now is the time to explore how multi-modal learning can enrich their domain — whether finance, healthcare, or beyond.

"Multi-modal AI doesn’t just process information — it experiences it." – Ashish Gore

If you’re interested, I can create a separate post showcasing a hands-on project: building a simple multi-modal question answering system using Hugging Face Transformers and CLIP.