As AI agents and complex RAG systems move into production, we're hitting a hidden bottleneck: context management. Most teams build their own ad-hoc system for handling chat history, retrieved documents, and agent state. The result is brittle, non-interoperable systems that are difficult to debug and scale. It's time to think bigger. This post proposes a vision for a new standard: the Managed Context Protocol (MCP).

What is the Managed Context Protocol (MCP)?

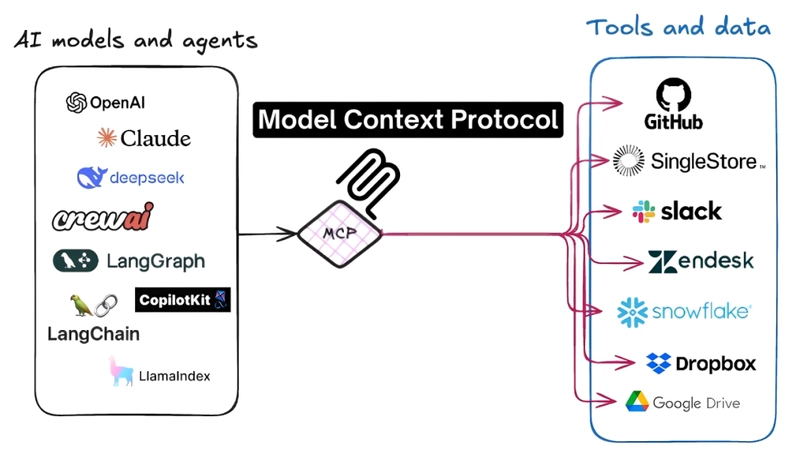

MCP is a conceptual framework for a standardized way to structure, version, and exchange context information for AI models and agents. Think of it as an "HTTP for AI context": a common language that lets different AI systems pass their state and knowledge to one another without custom glue code. An MCP "packet" would contain clearly defined layers for different types of context, such as the system prompt, retrieved documents, chat history, and the agent's scratchpad.

Why Do We Need a Protocol Like MCP?

A standardized protocol would unlock several key benefits for the AI ecosystem:

- Interoperability: An agent built in one framework could pass its complete state to an agent in another, enabling more complex, multi-agent workflows.

- Debugging & Auditing: With a structured, versioned context, you could replay an agent's exact state to understand why it made a particular decision, which matters for both reliability and compliance.

- Stateful AI: MCP would provide a robust mechanism for saving, loading, and migrating the state of complex agents, making them more resilient.

- Efficiency: A common standard would encourage shared, optimized tools and libraries for handling context, so each team would not have to build and tune its own.
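
To make the debugging and stateful-AI benefits more concrete, here is a minimal Python sketch of versioned context snapshots. Since MCP is only a proposal, everything in it is an assumption made for illustration: the `ContextStore` class, its `save`, `load`, and `fingerprint` methods, and the choice of an in-memory list are all hypothetical.

```python
import copy
import hashlib
import json


class ContextStore:
    """Hypothetical append-only store of versioned context snapshots."""

    def __init__(self):
        self._snapshots = []

    def save(self, context: dict) -> int:
        """Store a deep copy of the context and return its version number."""
        self._snapshots.append(copy.deepcopy(context))
        return len(self._snapshots) - 1

    def load(self, version: int) -> dict:
        """Return a copy of the context exactly as it was at `version`."""
        return copy.deepcopy(self._snapshots[version])

    def fingerprint(self, version: int) -> str:
        """SHA-256 of the canonical JSON form, e.g. for an audit log entry."""
        canonical = json.dumps(self._snapshots[version], sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


store = ContextStore()
context = {"chat_history": [{"role": "user", "content": "What was the Q1 revenue?"}]}
v0 = store.save(context)

# The agent keeps working and mutates its live context.
context["chat_history"].append(
    {"role": "assistant", "content": "Based on the Q1 report, the revenue was $15 million."}
)
v1 = store.save(context)

# Replaying version 0 shows the state before the assistant answered.
replayed = store.load(v0)
print(len(replayed["chat_history"]), len(store.load(v1)["chat_history"]))  # prints: 1 2
```

Deep copies keep each saved version isolated from later mutations, which is what makes a replay trustworthy. A real implementation would presumably persist snapshots rather than hold them in memory.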

A Conceptual MCP Payload

So, what would an MCP packet actually look like? Here is a conceptual JSON representation for a research assistant agent that has retrieved a document and answered a user's question.

A conceptual example of a Managed Context Protocol (MCP) payload:

    {
      "mcp_version": "1.0",
      "context_id": "ctx-a1b2c3d4-e5f6-7890-1234-567890abcdef",
      "timestamp": "2025-06-05T14:30:00Z",
      "metadata": {
        "user_id": "user-1138",
        "session_id": "sess-xyz789",
        "model_used": "claude-3-opus"
      },
      "context_layers": {
        "system_prompt": {
          "content": "You are a helpful research assistant. Summarize provided documents.",
          "priority": 1
        },
        "retrieved_documents": [
          {
            "doc_id": "doc-finance-q1-report",
            "source": "internal_db://reports/2025/q1.pdf",
            "content_snippet": "Q1 2025 revenue was $15M, a 10% increase...",
            "retrieval_score": 0.92
          }
        ],
        "chat_history": [
          { "role": "user", "content": "What was the Q1 revenue?" },
          { "role": "assistant", "content": "Based on the Q1 report, the revenue was $15 million." }
        ],
        "agent_scratchpad": {
          "thought": "The user's query is answered. I will wait for the next query.",
          "action": "WaitForUserInput"
        }
      }
    }

This structured format clearly separates different types of context, includes vital metadata, and creates a complete, replayable snapshot of the agent's state.
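
One way a consumer might use such a payload is to flatten its layers into a chat-style message list for a model call. The Python sketch below assumes the field names from the example payload. The `to_messages` function, its `min_score` cutoff, and the ordering of layers are hypothetical choices made for illustration, not part of any defined specification.

```python
import json

# A trimmed copy of the example payload; every field name comes from that example.
PAYLOAD_JSON = """
{
  "mcp_version": "1.0",
  "context_layers": {
    "system_prompt": {
      "content": "You are a helpful research assistant. Summarize provided documents.",
      "priority": 1
    },
    "retrieved_documents": [
      {
        "doc_id": "doc-finance-q1-report",
        "content_snippet": "Q1 2025 revenue was $15M, a 10% increase...",
        "retrieval_score": 0.92
      }
    ],
    "chat_history": [
      {"role": "user", "content": "What was the Q1 revenue?"}
    ]
  }
}
"""


def to_messages(payload: dict, min_score: float = 0.5) -> list:
    """Flatten the context layers into a chat-style message list.

    The ordering (system prompt, then documents, then history) and the
    `min_score` cutoff are illustrative assumptions.
    """
    layers = payload["context_layers"]
    system_text = layers["system_prompt"]["content"]

    # Keep only documents above the cutoff, highest score first.
    docs = [
        d for d in layers.get("retrieved_documents", [])
        if d["retrieval_score"] >= min_score
    ]
    docs.sort(key=lambda d: d["retrieval_score"], reverse=True)
    if docs:
        doc_lines = [f"[{d['doc_id']}] {d['content_snippet']}" for d in docs]
        system_text += "\n\nRetrieved documents:\n" + "\n".join(doc_lines)

    messages = [{"role": "system", "content": system_text}]
    messages.extend(layers.get("chat_history", []))
    return messages


payload = json.loads(PAYLOAD_JSON)
messages = to_messages(payload)
for message in messages:
    print(message["role"])
```

Because the layers are kept separate in the payload, each consumer can decide how to combine them. A different agent could ignore the documents or place them in a user turn instead.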

The Path to Adoption

For a standard like MCP to become a reality, it would require collaboration between the major AI labs and the open-source community. Similar to how the ONNX format created model interoperability, MCP could create *context* interoperability. A joint effort to define the schema and build supporting libraries would be a massive step forward for the maturity of the AI engineering field.
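
As a rough idea of what a first supporting library could offer, the Python sketch below checks a payload against a minimal set of rules. The list of required fields, the set of supported versions, and the `validate` function are assumptions drawn from the conceptual example in this post. No agreed schema exists yet.

```python
# Hypothetical required top-level fields and their expected Python types.
REQUIRED_TOP_LEVEL = {
    "mcp_version": str,
    "context_id": str,
    "timestamp": str,
    "metadata": dict,
    "context_layers": dict,
}
# Assumed for illustration; only "1.0" appears in the example payload.
SUPPORTED_VERSIONS = {"1.0"}


def validate(payload: dict) -> list:
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    for key, expected in REQUIRED_TOP_LEVEL.items():
        if key not in payload:
            errors.append(f"missing field: {key}")
        elif not isinstance(payload[key], expected):
            errors.append(f"{key} should be {expected.__name__}")

    version = payload.get("mcp_version")
    if isinstance(version, str) and version not in SUPPORTED_VERSIONS:
        errors.append(f"unsupported mcp_version: {version}")
    return errors


good = {
    "mcp_version": "1.0",
    "context_id": "ctx-a1b2c3d4-e5f6-7890-1234-567890abcdef",
    "timestamp": "2025-06-05T14:30:00Z",
    "metadata": {"user_id": "user-1138"},
    "context_layers": {},
}
bad = {"mcp_version": "2.0", "context_layers": []}

print(validate(good))
for problem in validate(bad):
    print(problem)
```

Returning every problem at once, rather than raising on the first, makes it easier for a receiving agent to report exactly why it rejected a payload from another framework.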

Conclusion

While foundation models get most of the attention, the "plumbing" that connects them, context management, is equally critical for building robust applications. The current fragmented approach is not sustainable. A standard like the Managed Context Protocol (MCP) offers a vision for a future where AI systems are more reliable, auditable, and capable of collaborating in complex ways.

"Great models are only half the battle. The future of AI will be defined by how we manage and orchestrate their knowledge. We need a common language for context." - Ashish Gore

If you're building complex agentic systems and facing the challenges of context management, I'd love to hear your thoughts. Feel free to connect via my contact information.