In industries like finance and healthcare, the stakes for AI are immense. A flawed model could lead to discriminatory loan practices or incorrect medical diagnoses—outcomes with severe financial and human costs. Consequently, regulators demand more than just high accuracy; they require transparency, fairness, and accountability. The "black box" approach is a non-starter. This is where the pillars of trustworthy AI—Governance, Explainability (XAI), and Auditing—become essential.

The Three Pillars of Trustworthy AI

1. AI Governance: The Strategic Framework

AI Governance is the comprehensive system of rules, policies, and processes that guide AI development and deployment. It establishes clear lines of accountability and integrates with existing frameworks like Model Risk Management (MRM). Key components include maintaining a model inventory, defining risk tiers for different AI systems, and establishing ethical review boards to oversee high-impact projects.
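As a rough illustration of how a model inventory and risk tiers might fit together, the sketch below uses a small in-memory registry. The class names, fields, and the rule that high-tier models need a completed ethical review are all assumptions made for this example, not a prescribed MRM schema.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class ModelRecord:
    """One entry in the model inventory (fields are illustrative)."""
    name: str
    owner: str            # accountable person or team
    use_case: str
    risk_tier: RiskTier
    ethics_review_done: bool = False


@dataclass
class ModelInventory:
    records: dict = field(default_factory=dict)

    def register(self, record: ModelRecord) -> None:
        self.records[record.name] = record

    def blocked_from_deployment(self) -> list:
        """Names of high-tier models that have not yet passed ethical review."""
        return [
            r.name
            for r in self.records.values()
            if r.risk_tier is RiskTier.HIGH and not r.ethics_review_done
        ]


# Hypothetical entries, for illustration only.
inventory = ModelInventory()
inventory.register(
    ModelRecord("credit_default_v1", "risk-analytics", "loan approval", RiskTier.HIGH)
)
inventory.register(
    ModelRecord("faq_chatbot", "marketing", "customer FAQ", RiskTier.LOW)
)

print(inventory.blocked_from_deployment())  # ['credit_default_v1']
```

In practice such a registry would live in a database or a dedicated governance tool, but the idea is the same: every model has a named owner and a tier, and the tier decides which controls apply.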

2. Explainable AI (XAI): Opening the Black Box

Explainable AI (XAI) provides tools to understand *why* a model made a specific decision. This is critical for debugging, for assessing fairness, and for giving regulators evidence that decisions are not driven by protected characteristics like race or gender. Popular frameworks like SHAP and LIME can dissect complex models and attribute outcomes to specific input features.

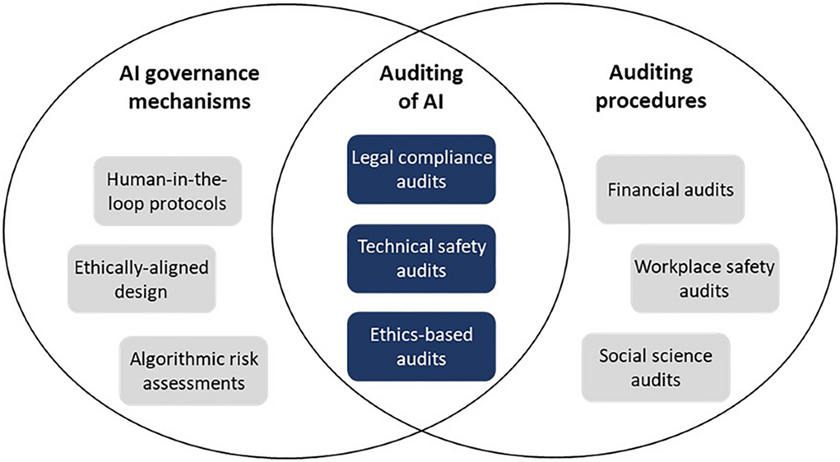

3. AI Auditing: Verifying Compliance and Performance

An AI audit is a systematic evaluation of an AI system against internal policies and external regulations. Auditors examine the entire lifecycle—from data sourcing and feature engineering to model performance and deployment monitoring—to check for bias, security vulnerabilities, and robustness. The goal is to create a transparent, verifiable record that can be presented to regulators.
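One bias check an audit might include is demographic parity, which compares the rate of positive decisions across groups. The sketch below is a minimal, library-free version; the function names, the decisions, and the group labels are hypothetical, and a real audit would typically look at several fairness metrics rather than this one alone.

```python
def selection_rate(decisions):
    """Share of positive (e.g., approved) decisions; decisions are 0 or 1."""
    return sum(decisions) / len(decisions)


def demographic_parity_difference(decisions, groups):
    """Return the largest gap in selection rate between groups, plus per-group rates."""
    by_group = {}
    for decision, group in zip(decisions, groups):
        by_group.setdefault(group, []).append(decision)
    rates = {g: selection_rate(ds) for g, ds in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical approval decisions (1 = approved) and group labels.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap, rates = demographic_parity_difference(decisions, groups)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

A gap of zero means every group is approved at the same rate. What size of gap is acceptable is a policy decision, which is one reason the governance framework and the audit need to be connected.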

XAI in Practice: A Code Example with SHAP

One of the most widely used XAI tools is SHAP (SHapley Additive exPlanations). It applies Shapley values from cooperative game theory to attribute a prediction to each input feature, so that the contributions add up to the difference between the prediction and a baseline. The following code demonstrates how to explain a credit default prediction, showing which factors pushed the model toward its decision for a single applicant.

```python
# A practical example of using SHAP for model explainability
import numpy as np
import pandas as pd
import shap
from sklearn.ensemble import GradientBoostingClassifier

# 1. Load sample credit risk data (toy values, for illustration only)
data = {
    'income': [50000, 120000, 30000, 75000],
    'credit_score': [650, 780, 590, 720],
    'loan_amount': [10000, 5000, 15000, 8000],
    'default': [1, 0, 1, 0],
}
df = pd.DataFrame(data)
X = df[['income', 'credit_score', 'loan_amount']]
y = df['default']

# 2. Train a model (e.g., a "black box" like Gradient Boosting)
model = GradientBoostingClassifier(random_state=0).fit(X, y)

# 3. Create a SHAP explainer
explainer = shap.TreeExplainer(model)
shap_values = explainer.shap_values(X)

# expected_value may be a scalar or a length-1 array depending on the SHAP version
base_value = float(np.ravel(explainer.expected_value)[0])

# 4. Explain a single "high-risk" prediction (the third applicant)
print(f"Explaining prediction for applicant with features: {X.iloc[2].to_dict()}")
print(f"Base value (average model output, in log-odds): {base_value:.2f}")
print(f"SHAP values (feature contributions): {shap_values[2]}")

# A positive SHAP value for a feature increases the prediction (risk of default).
# A negative value decreases it.
```

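Output like this lets an auditor or loan officer see which features pushed the prediction up or down. On a realistic dataset it could show, for example, that a low credit score and a high loan amount were the primary drivers of a high-risk prediction, which gives a clear, defensible explanation. With only four training rows, the toy model here may concentrate its attributions on whichever features its trees happened to split on, so treat the numbers as a demonstration of the mechanics rather than a meaningful result.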
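To make the game-theoretic idea behind SHAP concrete, the sketch below computes exact Shapley values by brute force for a tiny, hand-written scoring function. This is not how the SHAP library works internally (it uses much faster algorithms), and `risk_score`, the applicant, and the baseline are all hypothetical. Features outside a coalition are replaced by baseline values, which is one common way, though not the only one, to represent a missing feature.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, instance, baseline):
    """Exact Shapley values by enumerating every coalition of features."""
    names = list(instance)
    n = len(names)

    def value(coalition):
        # Features in the coalition take the instance's values; the rest use the baseline.
        x = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return f(x)

    phi = {}
    for i in names:
        others = [k for k in names if k != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


def risk_score(x):
    """Hypothetical hand-written score with one interaction term (not a trained model)."""
    score = 0.0
    score += 2.0 if x["credit_score"] < 600 else 0.0
    score += 1.0 if x["loan_amount"] > 12000 else 0.0
    # Extra risk only when a large loan meets a low income.
    score += 1.0 if (x["loan_amount"] > 12000 and x["income"] < 40000) else 0.0
    return score


applicant = {"income": 30000, "credit_score": 590, "loan_amount": 15000}
baseline = {"income": 75000, "credit_score": 720, "loan_amount": 8000}

phi = shapley_values(risk_score, applicant, baseline)
print({k: round(v, 3) for k, v in phi.items()})
# {'income': 0.5, 'credit_score': 2.0, 'loan_amount': 1.5}

# The contributions sum to the gap between the applicant's score and the baseline score.
print(round(sum(phi.values()), 3), risk_score(applicant) - risk_score(baseline))
```

The interaction term is split evenly between income and loan amount, which is what "fair" attribution means here: features that jointly create an effect share the credit for it.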

The Auditing Process in Practice

A mature AI auditing workflow in a regulated company typically involves:

- Risk Tiering: Classifying models based on their potential impact. A diagnostic tool is high-risk; a marketing chatbot is low-risk.

- Pre-Deployment Audit: An independent team reviews the model's documentation, fairness metrics (e.g., demographic parity), and robustness tests before it goes live.

- Continuous Monitoring: Post-deployment, the model's performance, data inputs, and predictions are logged and tracked for drift or unexpected behavior. XAI reports are generated automatically for high-impact decisions.

- Periodic Reviews: The entire system is re-audited annually or when significant changes are made to the model or its operating environment.
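For the continuous monitoring step, one simple drift signal is the Population Stability Index (PSI), which compares how a feature is distributed in live data against a reference sample. The sketch below is a minimal version under stated assumptions: the bin edges, the sample credit scores, and the alert threshold of 0.25 (a commonly quoted rule of thumb, not a regulatory requirement) are all illustrative.

```python
import math


def psi(expected, actual, bin_edges):
    """Population Stability Index between a reference sample and a live sample."""

    def bin_shares(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            idx = sum(v >= edge for edge in bin_edges)  # index of the bin v falls in
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical credit scores: training-time reference vs. recent applicants.
reference = [580, 610, 640, 660, 690, 710, 730, 760]
live = [560, 575, 590, 620, 640, 670, 720, 755]
edges = [600, 650, 700, 750]

drift = psi(reference, live, edges)
ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff for a significant shift

print(f"PSI = {drift:.3f}")
if drift > ALERT_THRESHOLD:
    print("Drift alert: trigger a review of the model and its inputs")
```

A check like this would normally run on a schedule for each important feature and for the model's output scores, with alerts feeding into the periodic review process.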

Conclusion

In finance and healthcare, deploying AI is not just a technical challenge—it's a regulatory and ethical one. Building a robust foundation of governance, leveraging XAI tools for transparency, and conducting rigorous audits are non-negotiable. This three-pronged approach is the only sustainable path to harnessing the power of AI while maintaining trust and ensuring compliance in high-stakes environments.

"In regulated AI, the right answer is not enough. You must also be able to prove how you got it. That's the essence of trust." - Ashish Gore

If your organization is navigating the complexities of AI compliance, feel free to reach out through my contact information to discuss governance strategies.