The rise of AI agents has transformed how we build intelligent applications. In 2025, two frameworks—LangChain and LangGraph—are leading the way in orchestrating reasoning workflows for LLM-powered systems.

LangChain: The Foundation

LangChain remains one of the most popular frameworks for chaining together prompts, APIs, and tools. Its modular approach allows developers to:

- Build conversational AI systems with memory and context.

- Integrate external tools and APIs easily.

- Leverage vector stores for Retrieval-Augmented Generation (RAG).
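To make the first bullet concrete: conversational memory mostly comes down to replaying recent turns into the next prompt. The sketch below is plain Python and does not use LangChain's own memory classes. `ConversationBuffer`, `max_turns`, and `as_prompt` are hypothetical names chosen for illustration.

```python
from collections import deque


class ConversationBuffer:
    """Keeps the most recent turns and renders them as prompt context."""

    def __init__(self, max_turns: int = 3):
        # A deque with maxlen silently drops the oldest turn once it is full
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def as_prompt(self, new_message: str) -> str:
        # Replay the stored turns, then append the new user message
        history = "\n".join(f"{role}: {text}" for role, text in self.turns)
        return f"{history}\nuser: {new_message}\nassistant:"


memory = ConversationBuffer(max_turns=2)
memory.add("user", "My name is Ada.")
memory.add("assistant", "Nice to meet you, Ada.")
memory.add("user", "I work on compilers.")  # evicts the first turn
prompt = memory.as_prompt("What do I work on?")
print(prompt)
```

A fixed-size window is the simplest policy. Summarizing older turns instead of dropping them is a common alternative when earlier context matters.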

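    from langchain_core.prompts import PromptTemplate
    from langchain_openai import ChatOpenAI  # assumes the langchain-openai package is installed

    # gpt-4o is a chat model, so use the chat interface; reads OPENAI_API_KEY from the environment
    llm = ChatOpenAI(model="gpt-4o")
    prompt = PromptTemplate.from_template("Translate {text} to French")

    # Pipe the prompt into the model; this replaces the deprecated LLMChain
    chain = prompt | llm
    print(chain.invoke({"text": "How are you?"}).content)

LangGraph: Next-Gen Orchestration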

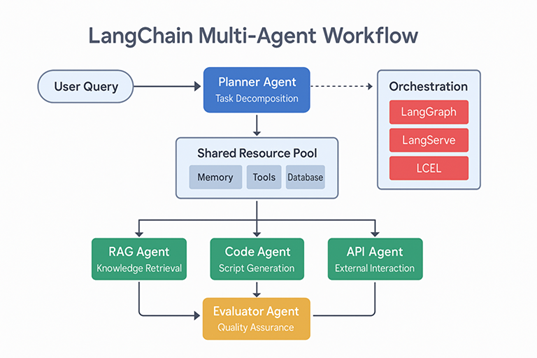

LangGraph builds on top of LangChain, introducing a graph-based execution model. Instead of linear chains, you can now design agent workflows as directed graphs, giving more control over branching, retries, and monitoring.
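To show what graph-based execution means in practice, here is a minimal, framework-free sketch. It is not LangGraph's API: `run_graph`, `max_retries`, and the node functions are hypothetical. Nodes are functions that return a partial state update, an edge is either a fixed target or a router function, and a failing node is retried a bounded number of times.

```python
from typing import Callable, Dict, Union

State = dict
Node = Callable[[State], State]
Edge = Union[str, Callable[[State], str]]  # fixed target or router function

END = "__end__"


def run_graph(nodes: Dict[str, Node], edges: Dict[str, Edge],
              start: str, state: State, max_retries: int = 2) -> State:
    current = start
    while current != END:
        for attempt in range(max_retries + 1):
            try:
                # Each node returns a partial update that is merged into the state
                state = {**state, **nodes[current](state)}
                break
            except RuntimeError:
                if attempt == max_retries:
                    raise  # give up after the last allowed retry
        edge = edges[current]
        current = edge(state) if callable(edge) else edge
    return state


calls = {"search": 0}


def search(state: State) -> State:
    calls["search"] += 1
    if calls["search"] == 1:
        raise RuntimeError("transient failure")  # first call fails, the retry succeeds
    return {"hits": ["doc-a", "doc-b"] if "ai" in state["query"].lower() else []}


def summarize(state: State) -> State:
    return {"answer": f"Found {len(state['hits'])} documents"}


def fallback(state: State) -> State:
    return {"answer": "No results"}


nodes = {"search": search, "summarize": summarize, "fallback": fallback}
edges = {
    "search": lambda s: "summarize" if s["hits"] else "fallback",  # conditional branch
    "summarize": END,
    "fallback": END,
}

result = run_graph(nodes, edges, "search", {"query": "AI in healthcare"})
print(result["answer"])
```

The router on `search` is the branching piece: the next node is decided from the state at run time rather than fixed in advance, which a linear chain cannot express.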

Why LangGraph?

- Visualize and debug agent workflows.

- Define conditional execution paths.

- Enable parallel tool calls for efficiency.
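The efficiency gain from parallel tool calls is easy to see without any framework. The sketch below uses Python's standard `concurrent.futures`. The two tools are hypothetical stubs whose `time.sleep` stands in for network latency, and this is not how LangGraph schedules nodes internally.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def web_search(query: str) -> str:
    time.sleep(0.2)  # stands in for network latency
    return f"web results for {query!r}"


def paper_search(query: str) -> str:
    time.sleep(0.2)
    return f"papers about {query!r}"


def run_tools_in_parallel(query: str) -> dict:
    tools = {"web": web_search, "papers": paper_search}
    with ThreadPoolExecutor(max_workers=len(tools)) as pool:
        # Submit every tool first, then collect results, so the waits overlap
        futures = {name: pool.submit(fn, query) for name, fn in tools.items()}
        return {name: future.result() for name, future in futures.items()}


start = time.perf_counter()
results = run_tools_in_parallel("AI in healthcare")
elapsed = time.perf_counter() - start
print(results)
print(f"took {elapsed:.2f}s")
```

Threads suit I/O-bound tools such as HTTP calls. Both tools wait at the same time, so the total should be close to the slowest call rather than the sum of the two.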

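    from typing import TypedDict
    from langgraph.graph import StateGraph, START, END

    class State(TypedDict):  # keys the nodes are assumed to read and write
        query: str
        results: str
        summary: str

    workflow = StateGraph(State)  # StateGraph requires a state schema
    workflow.add_node("search", search_tool)  # node functions are assumed to be defined elsewhere
    workflow.add_node("summarize", summarization_tool)
    workflow.add_edge(START, "search")  # entry point
    workflow.add_edge("search", "summarize")
    workflow.add_edge("summarize", END)

    result = workflow.compile().invoke({"query": "AI in healthcare"})  # compile, then invoke
    print(result)

Combining LangChain and LangGraph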

The real power comes when you combine the two. LangChain provides the building blocks (chains, memory, tools), while LangGraph manages orchestration and flow control.

Example Use Case: Research Assistant

- Use LangChain for RAG-based knowledge retrieval.

- Pass results into a LangGraph workflow for reasoning and summarization.

- Deliver structured answers with sources to the end-user.
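The three steps above can be sketched end to end in plain Python. This is a toy under stated assumptions: retrieval is simple word overlap rather than a vector store, `summarize` is a placeholder for an LLM call, and `Document`, `Answer`, `CORPUS`, and the file names are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Document:
    source: str
    text: str


@dataclass
class Answer:
    summary: str
    sources: List[str]


CORPUS = [
    Document("handbook.md", "Vacation requests need manager approval."),
    Document("security.md", "Rotate API keys every ninety days."),
    Document("onboarding.md", "New hires request vacation through the HR portal."),
]


def retrieve(query: str, corpus: List[Document], k: int = 2) -> List[Document]:
    """Step 1: rank documents by how many query words they share."""
    words = set(query.lower().split())
    scored = [(len(words & set(d.text.lower().rstrip(".").split())), d) for d in corpus]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [d for score, d in ranked[:k] if score > 0]


def summarize(docs: List[Document]) -> str:
    """Step 2: placeholder for an LLM call; here it only joins the passages."""
    return " ".join(d.text for d in docs)


def answer(query: str) -> Answer:
    """Step 3: return the summary together with the sources it came from."""
    docs = retrieve(query, CORPUS)
    return Answer(summary=summarize(docs), sources=[d.source for d in docs])


result = answer("how do I request vacation")
print(result.summary)
print(result.sources)
```

Carrying `sources` through every step is the design choice that matters: the final answer can cite where each passage came from instead of presenting unsupported text.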

Applications in 2025

- Enterprise Knowledge Systems: Automating research across internal documentation.

- Healthcare: Assisting doctors with evidence-based summaries.

- Education: Building personalized tutoring agents.

- Productivity: Orchestrating multi-step workflows like scheduling, summarizing, and reporting.

Best Practices

- Start with LangChain for core components, then scale with LangGraph.

- Implement logging and observability for debugging complex graphs.

- Optimize workflows with caching and parallel execution.

- Continuously evaluate performance against benchmarks.
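Two of these practices, observability and caching, can be prototyped with the standard library before reaching for dedicated tooling. In the sketch below, `observed`, `lookup`, and `enrich` are hypothetical names. `functools.lru_cache` is only appropriate for deterministic steps whose arguments are hashable.

```python
import functools
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("workflow")


def observed(step):
    """Log the duration of a workflow step, and the traceback if it fails."""
    @functools.wraps(step)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return step(*args, **kwargs)
        except Exception:
            log.exception("step %s failed", step.__name__)
            raise
        finally:
            log.info("step %s took %.3fs", step.__name__, time.perf_counter() - start)
    return wrapper


stats = {"calls": 0}


@functools.lru_cache(maxsize=128)
def lookup(term: str) -> str:
    """Stands in for a slow, deterministic tool call."""
    stats["calls"] += 1
    return term.upper()


@observed
def enrich(term: str) -> str:
    return lookup(term)


enrich("rag")
enrich("rag")  # served from the cache; the body of lookup does not run again
print(stats["calls"], lookup.cache_info().hits)
```

Wrapping each node with a decorator like this gives per-step timings in the logs, which is often enough to find the slow or failing node in a larger graph.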

Conclusion

As AI agents evolve, LangChain and LangGraph will remain essential for building intelligent, reliable, and scalable systems. By mastering both, developers can design applications that go beyond simple chatbots—creating agents that think, reason, and act.

"LangChain and LangGraph are redefining how AI agents are built, moving from simple scripts to orchestrated reasoning systems." - Ashish Gore

If you'd like to explore AI agent development with LangChain and LangGraph, feel free to get in touch.